An Illinois federal judge granted summary judgment to Google in a case alleging that its use of facial recognition software in Google Photos violated Illinois’ Biometric Information Privacy Act (“BIPA”), citing Plaintiffs’ failure to establish a concrete injury sufficient to confer Article III standing.

Background

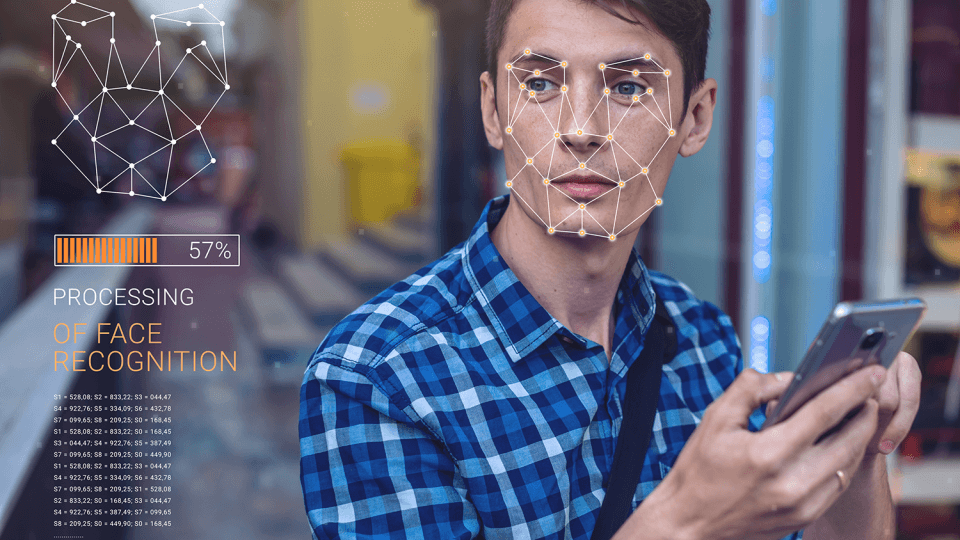

Google Photos is a cloud-based service that detects images of faces and creates face templates when a user uploads a photo. Plaintiffs argued that the templates qualify as “biometric identifiers” under BIPA and that Google therefore should have obtained their consent before creating them. After some discovery, Google sought summary judgment on the ground that the Plaintiffs had not shown a concrete injury sufficient to satisfy Article III standing. Applying the framework set out by the Supreme Court in Spokeo, the court separately analyzed Google’s retention and collection of face scans and found that neither constituted an injury in fact sufficient to confer standing in this case.

Retention of Face Scans

Section 15 of BIPA requires any private entity in possession of biometric identifiers or biometric information to provide a written policy establishing a retention schedule and destruction guidelines for such identifiers and information and to obtain consent for their collection. The Plaintiffs argued that Google violated BIPA by not providing the required disclosures or obtaining consent. The court, citing the Seventh Circuit’s holding in Gubala, reaffirmed that “the retention of an individual’s private information, on its own, is not a concrete injury sufficient to satisfy Article III.” In doing so, the court noted that Plaintiffs’ “face templates have not been shared with other Google Photos users or with anyone outside of Google itself; there has not been any unauthorized access to the accounts or data associated with their face templates or face groups; and hackers have not obtained their data.”

The court also rejected Plaintiffs’ argument that recent breaches related to Google’s Google+ offering established a future risk of harm, finding that Plaintiffs failed to offer enough evidence that breaches in a different product created a substantial risk of future harm with respect to Google Photos.

Collection of Face Scans

The court next looked at whether the Plaintiffs suffered a concrete injury from Google’s creation of their face templates without their knowledge. The court examined and disagreed with Patel v. Facebook Inc., in which a California district court held that plaintiffs had sufficiently alleged a concrete injury (i.e., Facebook’s failure to obtain users’ consent to create and store face templates from uploaded photos) to satisfy Article III. The Patel court’s finding of standing stood on two pillars: “the risk of identity theft arising from the permanency of biometric information, as described by the Illinois legislature, and the absence of in-advance consent to Facebook’s collection of the information.” Departing from Patel, the court found that there was no evidence of a substantial risk that the creation of the face templates would result in identity theft, and that no legislative finding sufficiently explained why an absence of consent would give rise to an injury independent of such risk. The court also pointed to several other factors supporting the lack of injury in fact with respect to the collection of face scans: (i) given that people expose their faces to the general public, obtaining a likeness of a face involves no additional intrusion on privacy, unlike the physical placement of a finger on a scanner or other object, or the exposure of a sub-surface part of the body like a retina; (ii) the creation of face templates would not be highly offensive because the templates are based on something that is visible to the ordinary eye; (iii) Google only used the facial images to create face templates that organize Plaintiffs’ photographs in private Google Photos accounts; and (iv) Google has not commercially exploited Plaintiffs’ faces or the face templates created from them.

Conclusion

In the majority of recently decided cases (Patel notwithstanding), courts have found that the collection of biometric information without consent can constitute an injury in fact only if the biometric information is monetized, disclosed to a third party without the subject’s consent, or used for a purpose other than supporting the product offered to the user. The court’s decision in Rivera follows a similar path and reinforces that an injury in fact requires more than a bare procedural violation of the Act.