The European Commission has launched the AI Act Service Desk, a single information platform bundling a new Compliance Checker, an official AI Act Explorer, a timeline, and an online helpdesk. The aim is to support businesses in understanding, implementing, and adhering to the AI Act (“the Act”). The Act itself entered into force on 1 Aug 2024 and rolls out in phases through 2 Aug 2027, so practical guidance is arriving just in time for teams scoping obligations for 2026–2027. The platform is hosted by the Commission (DG CONNECT) and explicitly marked as beta, so we should expect iterations and more depth over time.

We’ve tested the tools and share our thoughts below.

Compliance Checker: A promising start, but still light on decision support.

What Works Well

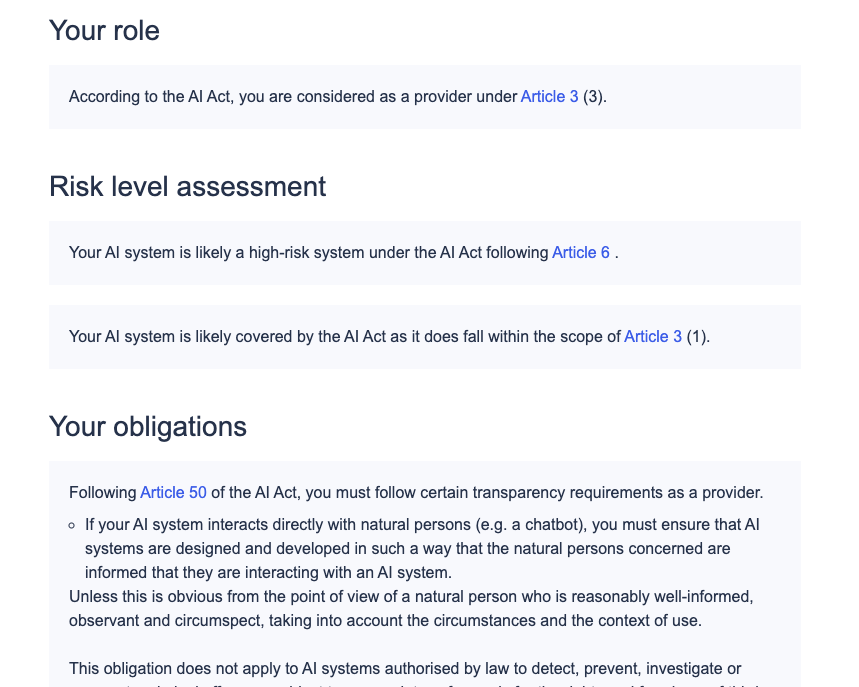

If you have a reasonably detailed understanding of your AI model or system, the checker provides a streamlined review through explicit steps that yield a clear outcome with official links. When you complete the walkthrough, the tool points you to the exact articles that likely apply (e.g., high‑risk classification in Article 6, or GPAI obligations in Chapter V) and summarises key points. This is exactly what an official checker should do.

Where it Currently Falls Short (Based on Our Testing)

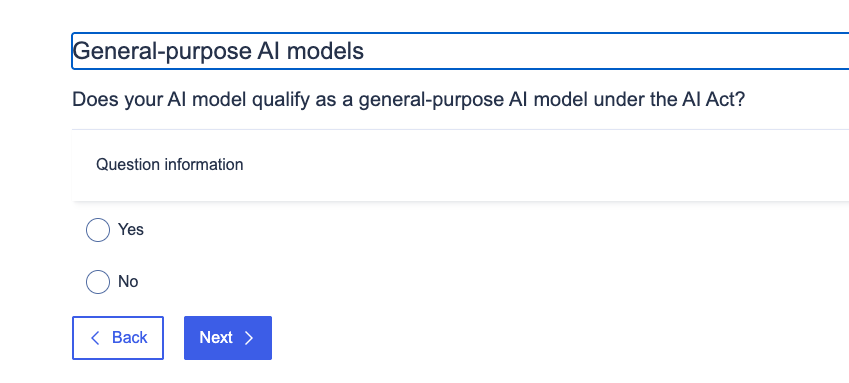

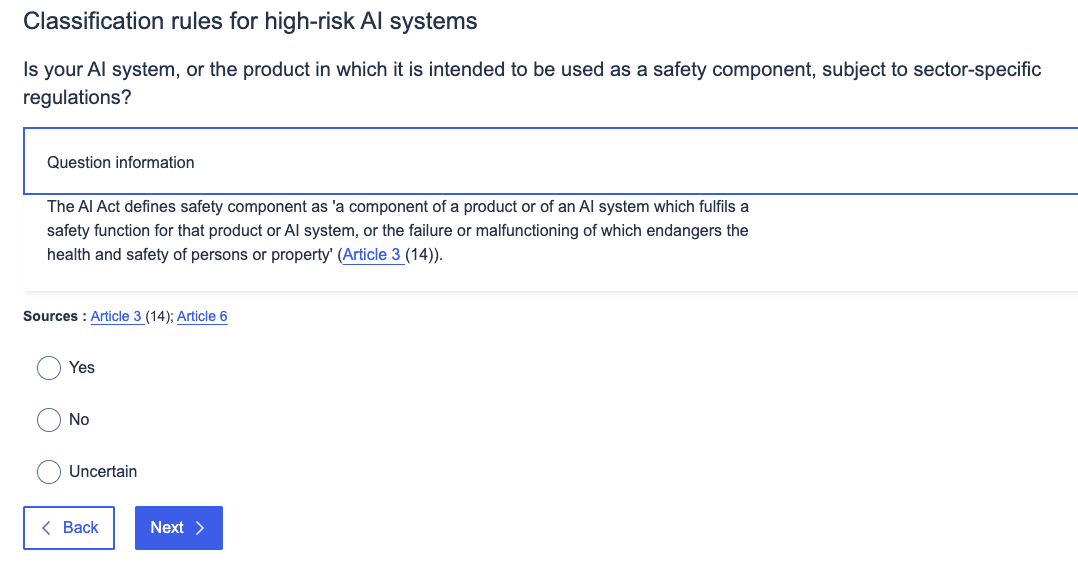

However, if the user is uncertain how the model or system fits within the definitions, or what its functions or capabilities are, some core questions will remain ambiguous in ways the checker cannot help resolve. It asks you to self-identify your role (provider, deployer, etc.) and to determine whether you’re dealing with a GPAI model or system, but in our experience with clients, these are exactly the threshold questions on which they most need assistance. In particular, for many multi‑party stacks (providers who are also deployers; deployers with multiple providers), this is the whole question.

For example, the tool doesn’t help a user decide how to answer the questions above, for instance by pointing them toward the Annex or the Guidelines on the scope of obligations for GPAI models, which point out that the classification is based on computing power rather than use case. This is a fundamental question, because the difference between a GPAI “model” and a GPAI “system” largely determines where (in what category) and how the AI product should be assessed, and therefore its scope and legal obligations under the Act.

If you are uncertain if your AI system is covered by the Act at all, you are asked the following:

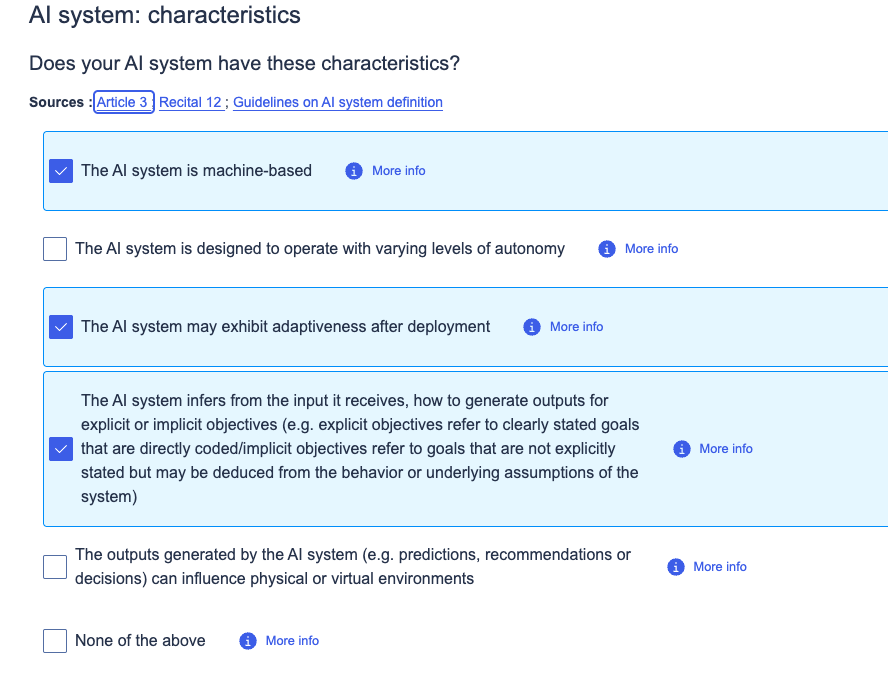

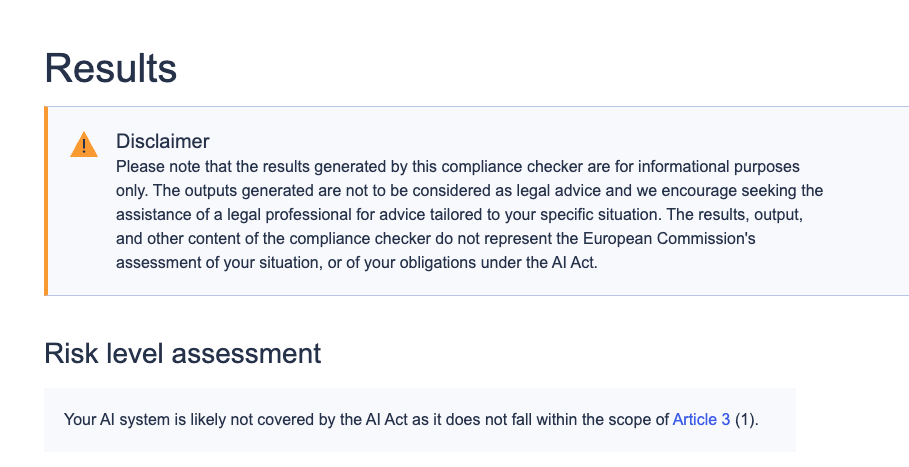

And if – as here – you do not check all the boxes that are part of the Act’s definitional language, you receive the following conclusion:

This question apparently requires that all five boxes be checked for a system to qualify as an AI system under the Act (the Risk level assessment above was triggered when we checked only three), but it’s not hard to imagine models that may not clearly check one of the boxes, or where the user is unsure of the degree (of autonomy, for example) required. Some users may err on the side of caution and check boxes that then bring them into scope; others may interpret the criteria more narrowly and select themselves “out” of scope. The list reflects the exact language of the law and requires that every element be confirmed, but the lack of nuance may leave non-lawyer users unsure how to respond.
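The all-or-nothing behaviour described above can be sketched in a few lines. This is only an illustration of what we observed, not the tool’s actual logic: the five `criterion_*` labels are hypothetical placeholders (not the checker’s wording), and the `None` value for “unsure” is our own assumption, added to show the state a plain checkbox cannot capture.

```python
from typing import List, Mapping, Optional

# Hypothetical placeholders for the five checkbox items; not the tool's wording.
CRITERIA = ("criterion_1", "criterion_2", "criterion_3", "criterion_4", "criterion_5")


def qualifies_as_ai_system(answers: Mapping[str, Optional[bool]]) -> bool:
    """Return True only if every criterion is explicitly confirmed.

    A missing answer, a "no" (False), and an "unsure" (None) all count
    against qualification, mirroring a checkbox that is simply left unchecked.
    """
    return all(answers.get(name) is True for name in CRITERIA)


def unresolved(answers: Mapping[str, Optional[bool]]) -> List[str]:
    """List the criteria the user marked as unsure (None)."""
    return [name for name in CRITERIA if name in answers and answers[name] is None]


if __name__ == "__main__":
    answers = {
        "criterion_1": True,
        "criterion_2": True,
        "criterion_3": True,
        "criterion_4": None,   # user is unsure, e.g. about the degree required
        "criterion_5": False,
    }
    print(qualifies_as_ai_system(answers))  # False
    print(unresolved(answers))              # ['criterion_4']
```

Recording the “unsure” items separately, rather than collapsing them into yes or no, is one way to flag exactly the questions that merit a closer legal look.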

While the “i” popups were helpful to some extent, they did not always clear up the confusion, since they largely cite the official text rather than clarify it.

In other cases, you can come to this conclusion indirectly. If you already know that your system falls into one of the high-risk categories, the tool will then tell you that you are a provider, as below.

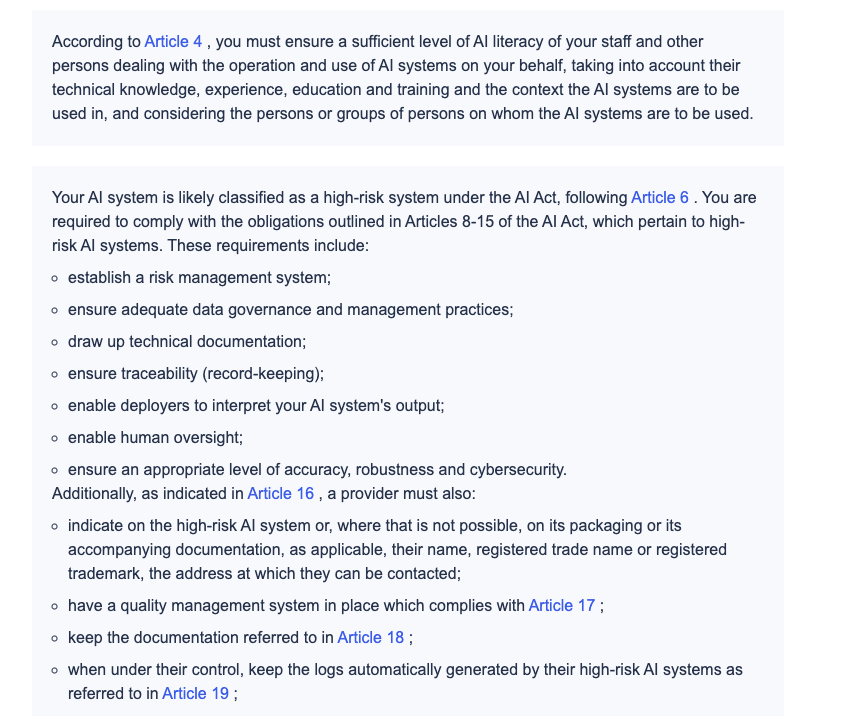

Where we answered yes and indicated an attribute under Annex I already covered by other harmonization requirements, we were given the following useful conclusions, summaries, and references for further compliance details.

However, as elsewhere, all the links point to the relevant articles of the Act. It would have been helpful to include further information on how to comply with those obligations.

There are, however, interesting new examples and sidebar notes in some of the “i” popups that provide additional, helpful context and clarification. For example: the scraping of voice samples is okay; scraping without the use of AI is okay; scraping for AI model training where persons are not identified is also okay; and scraping for AI systems that create images (deep fakes?) is also okay. Labeling or filtering lawfully acquired biometric datasets by protected characteristics is likewise okay for purposes of verifying that the data is sufficiently diverse.

What Would Make it (Near Term) Great:

- Built‑in resources to assist users when they are unsure about their exact role (provider / deployer / importer / distributor) using value‑chain outlines and common edge cases (fine‑tuning a third‑party model, or embedding vs. exposing).

- More articulated guidance distinguishing GPAI models vs. systems, with pointers to official guidelines and Annex criteria. (Note: Article 6(5) foresees Commission guidelines with practical examples by 2 Feb 2026. Hopefully, the checker will link to those the moment they are published.)

- Holistic anticipation of the user’s next steps, such as a short “starter pack” tailored to the outcome: key items on what evidence to gather to demonstrate compliance, the teams typically involved in the process, and where templates will live once available.

Other Tools:

Separate from the Compliance Checker, the EU has also published its own Explorer tool for navigating the Act. It includes a clear table of contents, article‑level summaries, and a curated list of relevant recitals for each article. It keeps you inside the official ecosystem and links out to annexes, the timeline, and the Service Desk if you need to raise a question.

The official Explorer is an incredibly useful access point and pathway to navigate the full text of the law:

- One‑paragraph summaries at the top of articles (e.g., Article 6) help non‑lawyers orient themselves before diving into the detailed text.

- Relevant recitals are listed per article, which is enough for most interpretive lookups.

- Direct annex access (I–XIII) keeps the high‑risk lists, documentation requirements, and GPAI criteria one click away.

- The integrated timeline gives concrete dates (e.g., 2 Aug 2026 for most high‑risk rules, 2 Aug 2027 for embedded systems), which are useful when planning compliance strategies.
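For planning purposes, the dates mentioned in this post can be turned into a simple readiness countdown. The sketch below is a minimal illustration under our own assumptions: the milestone labels are our shorthand, only dates cited in this post are included, and the official timeline remains the authoritative source.

```python
from datetime import date
from typing import Dict, List

# Dates as cited in this post; labels are our own shorthand (assumption).
MILESTONES: Dict[str, date] = {
    "Act entered into force": date(2024, 8, 1),
    "Article 6(5) guidelines due": date(2026, 2, 2),
    "Most high-risk rules apply": date(2026, 8, 2),
    "Rules for embedded systems apply": date(2027, 8, 2),
}


def days_until(today: date) -> Dict[str, int]:
    """Days from `today` to each milestone (negative if already passed)."""
    return {label: (when - today).days for label, when in MILESTONES.items()}


def upcoming(today: date) -> List[str]:
    """Milestones on or after `today`, in chronological order."""
    ordered = sorted(MILESTONES.items(), key=lambda item: item[1])
    return [label for label, when in ordered if when >= today]


if __name__ == "__main__":
    today = date.today()
    for label in upcoming(today):
        print(f"{label}: {days_until(today)[label]} days")
```

A compliance team could run something like this against its own internal checkpoints, replacing or extending the dictionary as further dates are confirmed.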

While this AI Act Explorer is good, other explorers (not affiliated with the EU) have been available for some time and offer additional features that would add value here as well. More granular connections to other articles, with recitals tagged per paragraph throughout (instead of all grouped at the bottom) and embedded links for cross‑references within the text of the Act (a live link any time “Annex III” is mentioned, for example), would be even more convenient and helpful.

The EU platform’s search function yields results, but without overviews or text snippets showing where the search term is used, as some other tools provide. The EU might consider adding these and other small details that make the pages feel more intuitive and the overall experience more effortless and complete. Regardless, this is a useful tool now available from the official source.

National Resources & Resources Tabs: How helpful are they?

Finally, the site offers two other tabs worth noting:

National Resources

This is a directory of Member State contacts and materials with filters for market surveillance authorities, regulatory sandboxes, national helpdesks, guidelines, and trainings. Its value depends somewhat on your footprint: if you deploy in multiple EU countries, this hub saves time hunting for the right national point of contact. Coverage is uneven (as expected this early), but examples like the Netherlands’ business‑facing site and guide are already listed. This tab will doubtless become more useful over time.

Resources

This section offers a central library for legislation, policy documents, guidelines, templates, webinars, and fact sheets. It’s still filling out, but you can already find timely items. For instance, a new note on GPAI providers sending documents to the AI Office (dated 20 Oct 2025) is included. We expect this to become the “how‑to” shelf that the Checker’s results page can deep‑link into.

Recommendations:

Overall, these are valuable new resources that are likely to get even better with time and further regulatory development. Treat the Checker as a starting review, not a decision engine, and consult in-house or outside counsel for a more detailed review of the high-risk tools in particular. Use the Checker to identify the right articles, then sanity‑check with the Explorer and, when needed, ask the AI Act Service Desk about your specific scenario.

If you’re unsure about specifics around organizational roles or model scope, document your reasoning. Article 6 anticipates official guidance and requires documentation when you conclude a listed use is not high‑risk, and this is good practice even outside Article 6. Finally, be sure to keep relevant dates front‑and‑center. The timeline clarifies what goes into effect when so that you can align internal readiness to the 2026 and 2027 checkpoints.